We are happy to announce that our paper titled “Exploring Human Perception-Aligned Perceptual Hashing” has been accepted for publication in IEEE Consumer Electronics Magazine (IF 3.7). This work was done in collaboration with the National Institute of Criminalistics and Criminology, in the context of the master's thesis of Jelle De Geest. It is an extended version of our IEEE GEM 2024 paper “Perceptual Hashing Using Pretrained Vision Transformers”, which won a Best Paper Award. The extended version provides a more extensive evaluation and presents new experimental results on the detection of visually similar images with a different acquisition origin.

The widespread sharing of images makes it difficult to control the spread of harmful content on consumer devices, particularly Child Sexual Abuse Material (CSAM). Perceptual hashing offers a solution by enabling the fast detection of blacklisted images through compact representations of visual content.

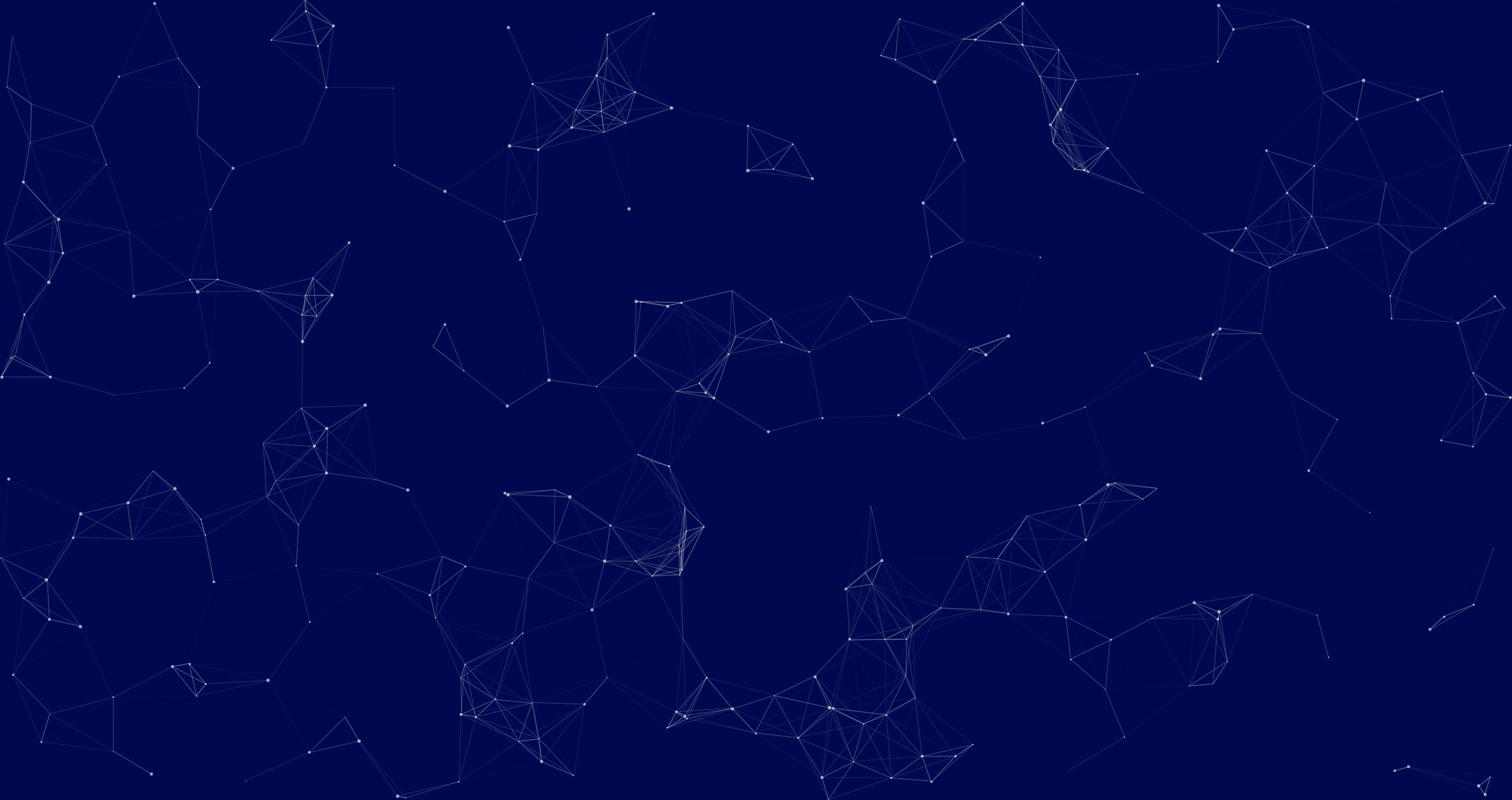
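
To make the matching step concrete, here is a minimal sketch of how a query hash could be checked against a blacklist. It assumes hashes are fixed-length bit strings stored as integers and compared by Hamming distance, which is common for perceptual hashes but is not spelled out in this post. The function names, the 16-bit hash values, and the `max_distance` threshold are all hypothetical and chosen only for illustration.

```python
def hamming_distance(hash_a: int, hash_b: int) -> int:
    """Number of bit positions in which two equal-length hashes differ."""
    return bin(hash_a ^ hash_b).count("1")


def find_blacklist_matches(query_hash, blacklist, max_distance=8):
    """Return (label, distance) pairs within max_distance bits, closest first."""
    matches = []
    for label, known_hash in blacklist.items():
        distance = hamming_distance(query_hash, known_hash)
        if distance <= max_distance:
            matches.append((label, distance))
    return sorted(matches, key=lambda match: match[1])


# Hypothetical 16-bit hashes, for illustration only.
blacklist = {
    "known_a": 0b1011001011110000,
    "known_b": 0b0000111100001111,
}
query = 0b1011001011110010  # differs from known_a in a single bit

matches = find_blacklist_matches(query, blacklist, max_distance=2)
print(matches)
```

A small threshold tolerates minor changes to an image while keeping the comparison cheap, since each check is a single XOR and a bit count.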

However, automatically detecting (near-)duplicate images in overwhelming volumes of data is challenging due to the limitations of traditional perceptual hashing methods. First, existing methods can fail to detect images with minor modifications, in particular spatial modifications. Second, they were often designed to find images derived from the same original image, and hence cannot recognize visually similar images that come from a different acquisition origin. For example, the figure below shows false positive detections for the traditional pHash method. The false positives share the same structure as the target image, but not the same content, whereas a human would be expected to confuse images with similar content. Hence, traditional methods are not aligned with human perception.

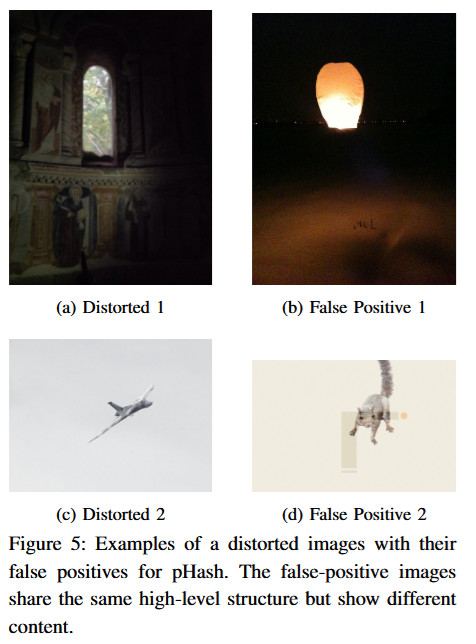
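
The sensitivity to spatial modifications can be illustrated with a simplified difference hash (dHash, one of the traditional methods mentioned in this post). The sketch below assumes the image has already been converted to grayscale and resized to a 9x8 grid, and it uses a synthetic left-to-right gradient rather than a real photo. A gradient is an extreme case, picked so that the effect of mirroring is easy to see.

```python
def dhash_bits(gray_rows):
    """Difference hash: one bit per horizontally adjacent pixel pair (1 if left > right)."""
    bits = []
    for row in gray_rows:
        for left, right in zip(row, row[1:]):
            bits.append(1 if left > right else 0)
    return bits


def hamming_distance(bits_a, bits_b):
    """Number of positions in which two equal-length bit lists differ."""
    return sum(a != b for a, b in zip(bits_a, bits_b))


# Synthetic grid with 8 rows of 9 pixels: brightness decreases from left to right.
image = [[(8 - col) * 30 for col in range(9)] for _ in range(8)]

# Horizontal mirroring reverses every row.
mirrored = [list(reversed(row)) for row in image]

original_hash = dhash_bits(image)
mirrored_hash = dhash_bits(mirrored)
distance = hamming_distance(original_hash, mirrored_hash)
print(len(original_hash), distance)
```

Because the hash encodes the direction of local brightness changes, mirroring flips every comparison in this example, so all 64 bits differ even though a human would still see the same picture.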

This study proposes ViTHash, which uses Vision Transformers (ViTs), specifically the CLIP model, to bring perceptual hashing closer to human perception. ViTHash is compared against traditional perceptual hashing methods such as pHash, dHash, and PDQHash. Quantitative results show that ViTHash outperforms traditional methods in handling spatial distortions such as rotation and mirroring, although it is less robust to visual quality distortions such as blurring and compression. Qualitative analysis reveals that ViTHash aligns more closely with human perception. For example, the figure below shows false positive detections for the proposed ViTHash method. The false positives share the same content as the target image, which is the kind of confusion a human could also make.

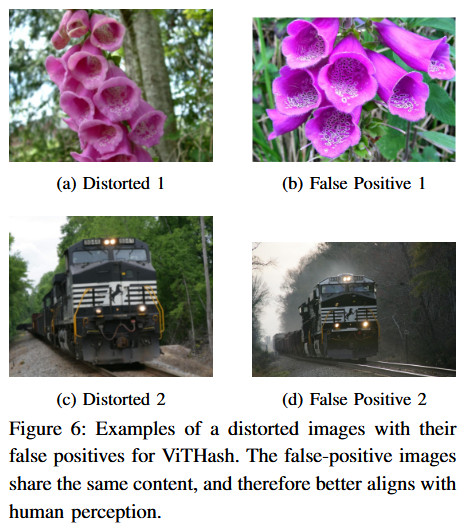
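
This post does not describe how ViTHash turns a model output into a hash, so the following is only a sketch of one common way to derive a compact binary code from an embedding vector: keep the sign of each dimension. This is an assumption for illustration and not necessarily the method used in the paper. The three short vectors are made-up placeholders, not real CLIP embeddings, which have hundreds of dimensions.

```python
def binarize_embedding(embedding):
    """Map each embedding dimension to one bit: 1 if the value is positive, else 0."""
    return [1 if value > 0 else 0 for value in embedding]


def hamming_distance(bits_a, bits_b):
    """Number of positions in which two equal-length bit lists differ."""
    return sum(a != b for a, b in zip(bits_a, bits_b))


# Made-up placeholder vectors standing in for image embeddings.
embedding_a = [0.42, -0.13, 0.88, -0.57, 0.05, -0.91, 0.33, 0.27]
embedding_b = [0.39, -0.10, 0.91, -0.60, -0.02, -0.87, 0.30, 0.25]  # close to a
embedding_c = [-0.51, 0.44, -0.12, 0.73, -0.38, 0.09, -0.66, -0.20]  # far from a

hash_a = binarize_embedding(embedding_a)
hash_b = binarize_embedding(embedding_b)
hash_c = binarize_embedding(embedding_c)

print(hamming_distance(hash_a, hash_b))
print(hamming_distance(hash_a, hash_c))
```

Under this assumption, vectors that are close in embedding space yield codes that differ in few bits, so the same Hamming distance lookup used for traditional hashes still applies.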

Additionally, ViTHash is capable of identifying visually similar images, even when they come from different acquisition origins. For example, the figure below shows a target image and the two most similar images for both the traditional pHash and the proposed ViTHash. The closest pHash matches show completely different content, whereas the ViTHash matches align with the target image (i.e., an insect with wings). This again demonstrates that ViTHash better aligns with human perception.

These findings demonstrate that ViTHash offers significant potential for applications that require nuanced image similarity assessments. It provides a valuable tool for enhancing the detection of illicit content on consumer electronics devices and for supporting law enforcement efforts.

The source code of ViTHash is available on GitHub.com.

Green Open Access Paper download: Exploring Human Perception-Aligned Perceptual Hashing