We are happy that our paper titled “Perceptual Hashing Using Pretrained Vision Transformers” was accepted at the IEEE CTSoc Conference on Gaming, Entertainment, and Media (GEM) 2024. This work was done in collaboration with the National Institute of Criminalistics and Criminology. We are also proud that this paper won a Best Paper Award!

The rapid evolution of digital image circulation has necessitated robust techniques for image identification and comparison, particularly for sensitive applications such as detecting Child Sexual Abuse Material (CSAM) and preventing the spread of harmful content online.

Traditional perceptual hashing methods, while useful, fall short when exposed to some common image transformations, or when images are doctored to avoid detection, rendering them ineffective for nuanced comparisons.

Addressing this challenge, this paper introduces a novel pretrained vision transformer artificial intelligence (AI) model approach that enhances the robustness and accuracy of perceptual hashing. Leveraging a pretrained Vision Transformer (ViT-L/14), our approach integrates visual and textual data processing to generate feature arrays that represent perceptual image hashes.

Through a comprehensive evaluation using a dataset of 50,000 images, we demonstrate that our method offers significant improvements in detecting similarities for certain complex image transformations, aligning more closely with human visual perception than conventional methods. While our method presents certain initial drawbacks such as larger hash sizes and high computational complexity, its ability to better handle perceptual nuances presents a forward step in the realm of image forensics.

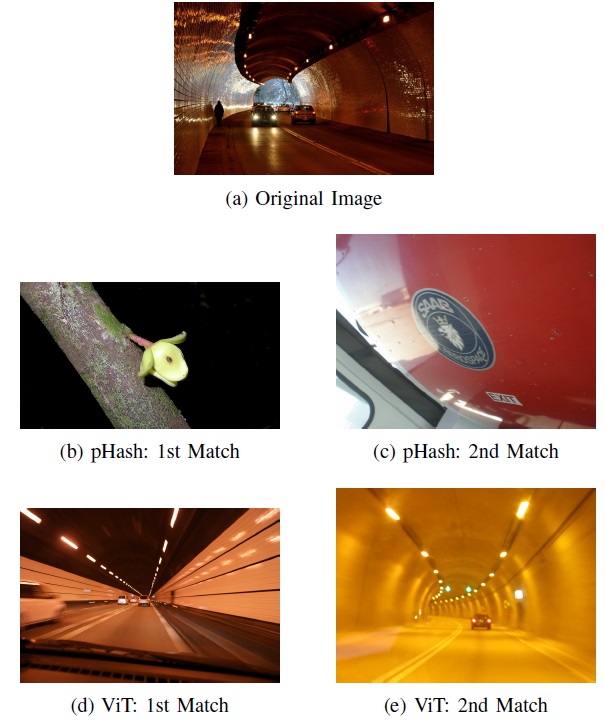

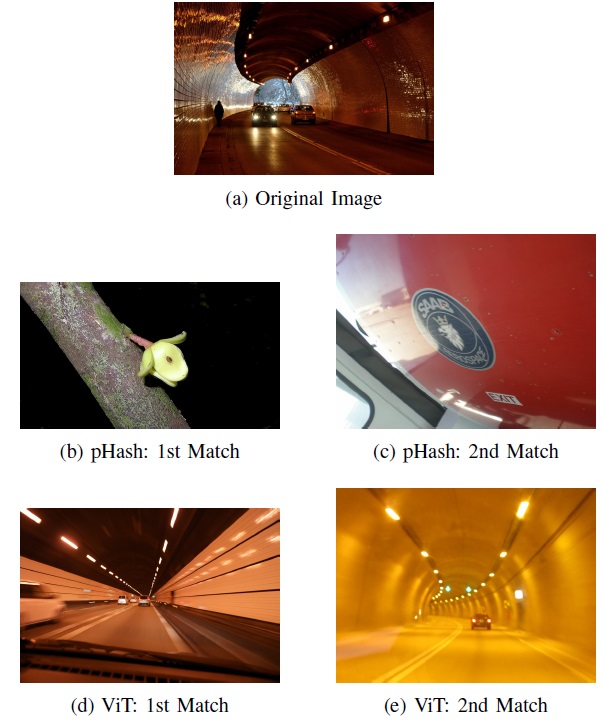

When there is no image match present in the database, the closest matches with our proposed ViT method closely align with human visual perception. For example, an image of a car in a tunnel results in closest matches with other images made in tunnels (using ViT). In contrast, the closest matches using a conventional method such as pHash show entirely different content. For example, an image of a car in a tunnel results in closest matches with a branch or a close-up of a car brand (using pHash).

When there is no image match present in the database, the closest matches with our proposed ViT method closely align with human visual perception. For example, an image of a car in a tunnel results in closest matches with other images made in tunnels (using ViT). In contrast, the closest matches using a conventional method such as pHash show entirely different content. For example, an image of a car in a tunnel results in closest matches with a branch or a close-up of a car brand (using pHash).

The potential applications of this research extend to law enforcement, digital media management, and the broader domain of content verification, setting the stage for more secure and efficient digital content analysis.

Paper download: Perceptual Hashing Using Pretrained Vision Transformers