Our paper titled “TGIF: Text-Guided Inpainting Forgery Dataset” was accepted at the IEEE Int. Workshop on Information Forensics & Security (WIFS) 2024. This work was done in collaboration with Symeon (Akis) Papadopoulos & Dimitris Karageorgiou from the Media Analysis, Verification and Retrieval Group (MeVer) of the Centre for Research and Technology Hellas (CERTH), Information Technologies Institute (ITI), in Thessaloniki, Greece. 🇬🇷

Together, we created the TGIF Dataset: images manipulated with text-guided inpainting forgeries, intended to support the training and evaluation of image forgery localization (IFL) & synthetic image detection (SID) methods. The TGIF dataset contains ~75k forged images, created with Stable Diffusion 2, SDXL, and Adobe Firefly. We also benchmark several state-of-the-art IFL and SID methods on TGIF. Using the dataset and benchmark, we gained new insights into the impact of generative AI on multimedia forensics. These insights are illustrated with the example below.

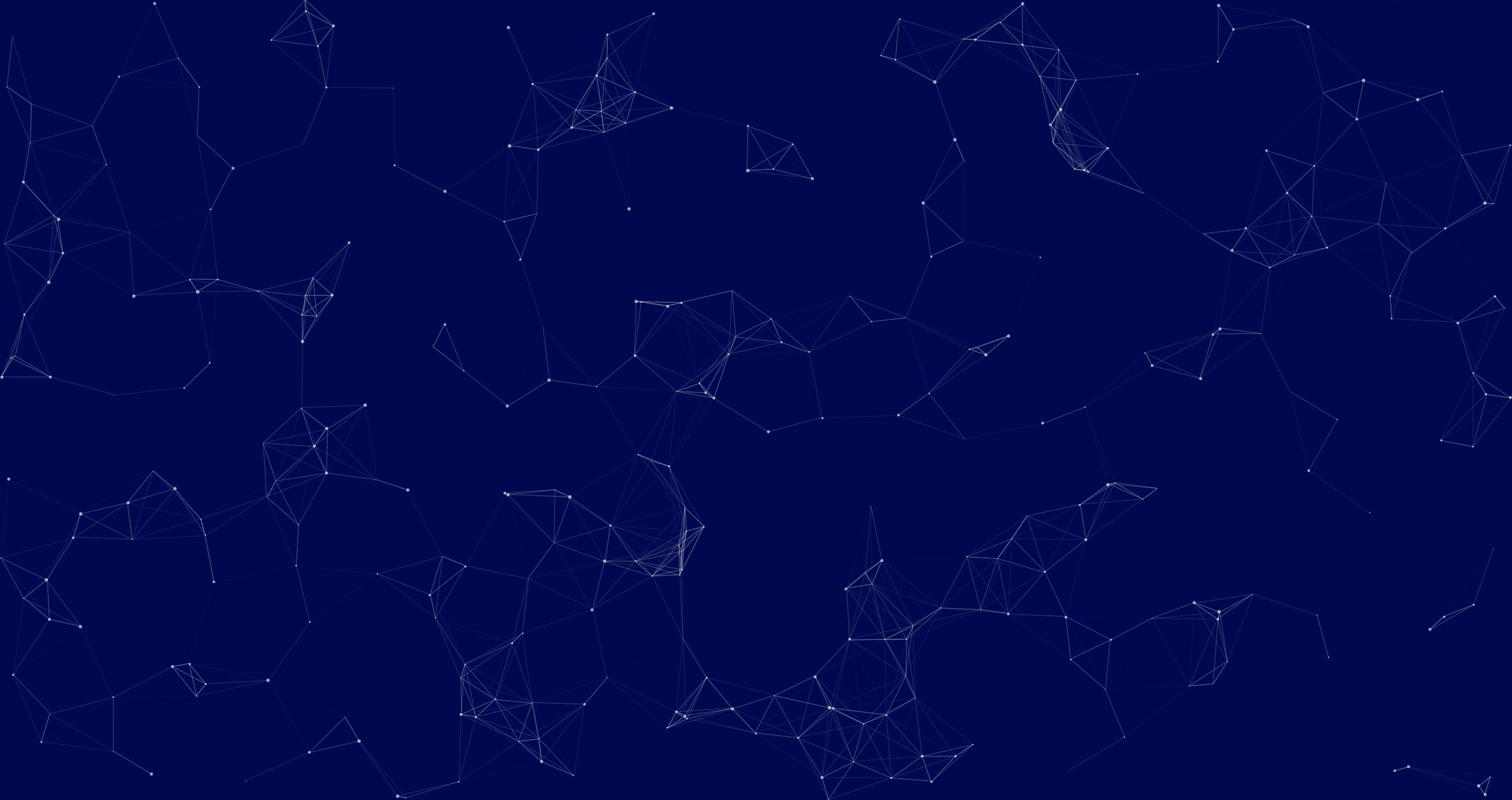

🏔️🎿 The picture below shows Dimitris Karageorgiou from MeVer & Hannes Mareen from IDLab on Greece’s iconic Mt. Athos. They appear to be wearing skis, but were they really? 🧐

Dimitris & Hannes with skis on top of Mt. Athos, Greece. Were they really wearing skis?

⚠️ No, they were not actually wearing skis. The skis were added using GenAI 🤖, by selecting the region around the feet and using the prompt “skis”. Under the hood, text-guided inpainting works similarly to GenAI methods that create fully synthetic images from a textual prompt. The difference is that the AI is told to create new pixels only in the selected area and to keep the rest of the image the same.

GenAI was used to add the skis. The rest of the picture is authentic.
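
In idealized form, the inpainting step described above is a masked composite. In the notation below, which is our own rather than the paper's, $x$ is the original image, $m$ a binary mask equal to 1 inside the selected region, $p$ the text prompt (here “skis”), and $G$ the generative model:

$$
\hat{x} = m \odot G(x, m, p) + (1 - m) \odot x
$$

Here $\odot$ is element-wise multiplication, so every pixel with $m = 0$ is copied unchanged from $x$. This is the ideal case; not every inpainting pipeline actually preserves the pixels outside the mask.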

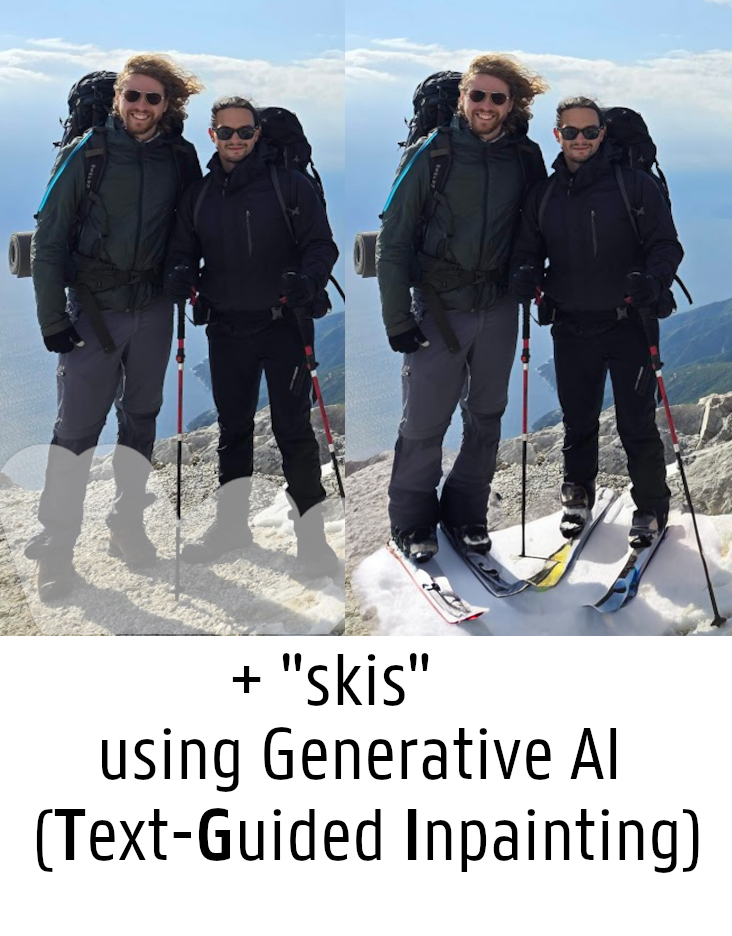

🤖🕰️ Older GenAI methods were limited to low resolutions (e.g., 512x512 pixels for Stable Diffusion 2). To inpaint higher-resolution images, we can take a 512x512px crop around the selected region and only give that part to the GenAI method. Afterwards, we splice ✂️ the selected region by copying-and-pasting it back into the high-res image. This is also how Generative Fill in Adobe Photoshop / Firefly works. ✨
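
The crop-and-splice procedure can be sketched with NumPy as follows. This is a minimal illustration under our own assumptions, not the code used to generate TGIF: `crop_inpaint_splice` and the `inpaint_fn` callable are hypothetical names, `inpaint_fn` merely stands in for a low-resolution inpainting model such as Stable Diffusion 2, and the selected region is assumed to fit inside a single 512x512 window.

```python
import numpy as np


def crop_inpaint_splice(image, mask, inpaint_fn, size=512):
    """Inpaint a high-res image by only handing a size x size crop to the model.

    image:      H x W x 3 uint8 array, with H >= size and W >= size
    mask:       H x W bool array, True where new content should appear
                (assumed non-empty and to fit inside one size x size window)
    inpaint_fn: hypothetical callable (crop, crop_mask) -> inpainted crop,
                standing in for a low-resolution model such as SD2
    """
    h, w = mask.shape
    ys, xs = np.nonzero(mask)
    # Centre the crop on the mask's bounding box, clamped to the image borders.
    cy = (ys.min() + ys.max()) // 2
    cx = (xs.min() + xs.max()) // 2
    top = int(np.clip(cy - size // 2, 0, h - size))
    left = int(np.clip(cx - size // 2, 0, w - size))
    window = (slice(top, top + size), slice(left, left + size))

    # Only the low-resolution crop is given to the generative model.
    crop, crop_mask = image[window], mask[window]
    generated = inpaint_fn(crop, crop_mask)

    # Splice: paste back only the masked pixels; all other pixels stay authentic.
    result = image.copy()
    region = result[window]  # a view into `result`, so writes go through
    region[crop_mask] = generated[crop_mask]
    return result


# Usage with a random "photo" and a placeholder model that paints the mask white.
rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(1024, 1536, 3), dtype=np.uint8)
mask = np.zeros((1024, 1536), dtype=bool)
mask[800:900, 600:760] = True  # e.g. the region around the feet
out = crop_inpaint_splice(img, mask, lambda crop, m: np.full_like(crop, 255))
```

Because only the masked pixels are replaced, every pixel outside the selection stays bit-identical to the original. The boundary between authentic and generated content is the kind of splicing trace that IFL methods are designed to find.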

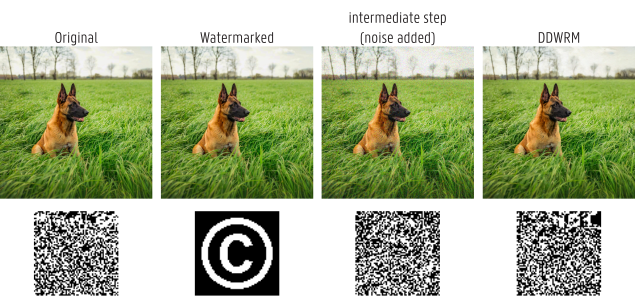

⚠️✂️ The spliced, inpainted area can be detected and localized by Image Forgery Localization (IFL) methods. For example, TruFor nicely highlights the spliced feet/ski area.

The Image Forgery Localization method ‘TruFor’ correctly highlights the inpainted area in the spliced image.

🤖🚀 Newer GenAI methods support higher resolutions (e.g., Stable Diffusion XL supports 1024x1024px). Therefore, we no longer need to take a crop and later splice the inpainted region back. Instead, we can give the full high-res image to the GenAI method. However, even when we only select the feet/ski region, the AI actually generates an entirely new picture. Although the rest of the picture appears to be the same, subtle differences become visible when we zoom in: the picture is fully regenerated.

The original image (without skis) vs. the spliced image (where only the feet/ski area is new) vs. the fully regenerated image (where all pixels are regenerated, even though it’s hard to tell without zooming in).

When zooming in on the images, we can find subtle differences (e.g., the shape of the sunglasses or the texture of the beard).
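
To make “fully regenerated” concrete at the pixel level, the sketch below measures which fraction of the pixels outside the selected region changed. `changed_outside_mask` is a hypothetical helper of our own, and it needs the original image, which a fact-checker usually does not have; it illustrates the difference between the two cases rather than serving as a detector. The regenerated image is simulated by flipping the least significant bit of every value, which is invisible to the eye but changes every pixel.

```python
import numpy as np


def changed_outside_mask(original, edited, mask):
    """Fraction of pixels outside `mask` where at least one channel differs."""
    changed = np.any(original != edited, axis=-1)  # H x W bool map
    return float(changed[~mask].mean())


rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
mask = np.zeros((64, 64), dtype=bool)
mask[40:56, 20:44] = True  # the "feet/ski" region

spliced = original.copy()
spliced[mask] = 255  # only the masked region is new

regenerated = original ^ 1  # every value off by one: invisible, but all pixels differ
regenerated[mask] = 255

print(changed_outside_mask(original, spliced, mask))      # 0.0
print(changed_outside_mask(original, regenerated, mask))  # 1.0
```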

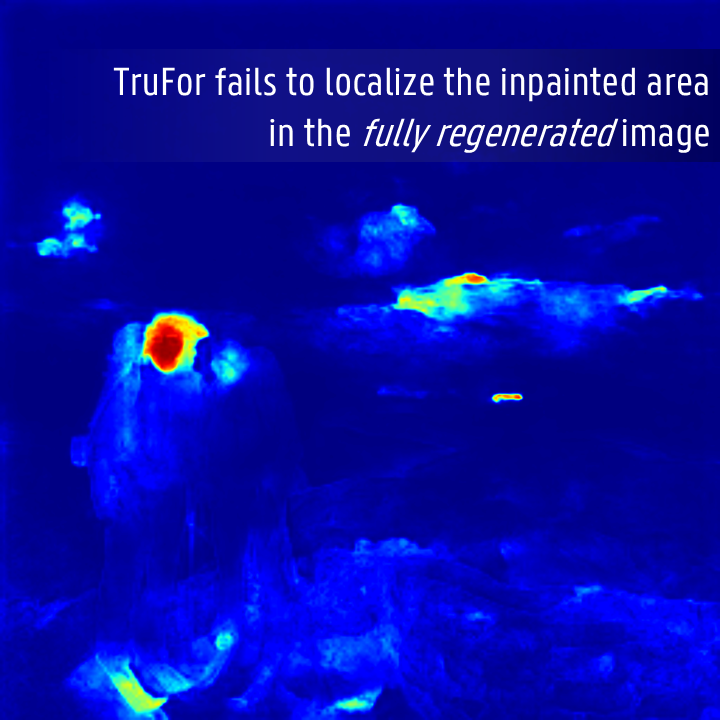

❌✂️ The fully regenerated image cannot be detected by IFL methods anymore. For example, TruFor highlights the wrong region and reports a low forgery score and confidence.

IFL methods such as TruFor do not work on fully regenerated images.
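
One common way to quantify whether an IFL method “highlights the wrong region” is to threshold its heatmap and compare it with the ground-truth inpainting mask, for instance with pixel-level F1 and IoU. The helper below is a minimal sketch assuming a heatmap with values in [0, 1] and a fixed threshold of 0.5; the function name and threshold are our own choices and may differ from the evaluation protocol in the paper.

```python
import numpy as np


def localization_scores(heatmap, gt_mask, threshold=0.5):
    """Pixel-level F1 and IoU between a thresholded heatmap and a ground-truth mask."""
    pred = heatmap >= threshold
    tp = np.logical_and(pred, gt_mask).sum()   # forged pixels that were flagged
    fp = np.logical_and(pred, ~gt_mask).sum()  # authentic pixels that were flagged
    fn = np.logical_and(~pred, gt_mask).sum()  # forged pixels that were missed
    if tp + fp + fn == 0:  # nothing forged and nothing flagged
        return 1.0, 1.0
    return float(2 * tp / (2 * tp + fp + fn)), float(tp / (tp + fp + fn))


gt = np.zeros((8, 8), dtype=bool)
gt[4:8, 2:6] = True  # ground truth: the inpainted feet/ski area

good = gt.astype(float)  # heatmap that highlights exactly the right area
wrong = np.zeros((8, 8))
wrong[0:4, 2:6] = 0.9    # confident, but on the wrong region

print(localization_scores(good, gt))   # (1.0, 1.0)
print(localization_scores(wrong, gt))  # (0.0, 0.0)
```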

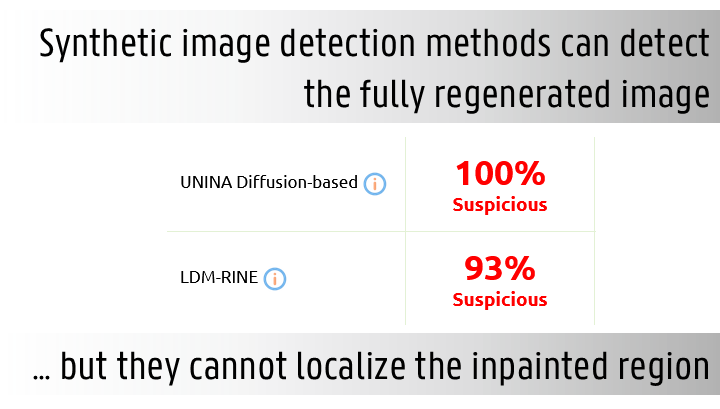

⚠️🤖 Fortunately, some Synthetic Image Detection (SID) methods can detect that the fully regenerated image is fake. However, since these methods were developed to detect fully synthetic images, they cannot localize the manipulated area: they cannot tell us that only the skis were added. ❌✂️

SID methods can detect that fully regenerated images are synthetic, but they cannot localize the inpainted area in a heatmap.

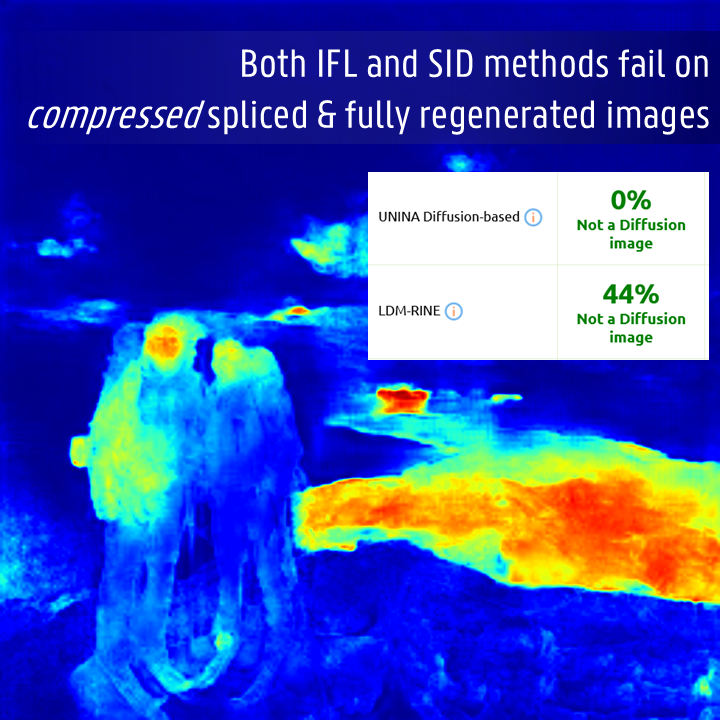

❌💾 Moreover, both IFL and SID methods fail when we compress the image to a lower quality, especially with WEBP (rather than JPEG). Images shared on social media are typically compressed 🌐, which highlights how challenging it is to fact-check such images in practice.

Compressing the images to a lower quality removes all traces used by current IFL and SID methods, especially the newer WEBP compression.
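
To test detectors under conditions closer to social media, images can be re-encoded at a lower quality before analysis. The sketch below does this in memory with Pillow; the `recompress` helper and the quality value of 30 are illustrative assumptions, not the settings used in the paper. WEBP encoding requires a Pillow build with libwebp (the standard wheels usually include it), so the code checks for it via `PIL.features`.

```python
import io

from PIL import Image, ImageChops, features


def recompress(image, fmt="WEBP", quality=30):
    """Re-encode a PIL image in memory with lossy compression and decode it again."""
    buffer = io.BytesIO()
    image.convert("RGB").save(buffer, format=fmt, quality=quality)
    buffer.seek(0)
    return Image.open(buffer).convert("RGB")  # convert() forces the pixels to load


# A noisy test image; real experiments would use the (forged) photos instead.
original = Image.effect_noise((256, 256), 64).convert("RGB")

formats = ["JPEG"] + (["WEBP"] if features.check("webp") else [])
compressed = {fmt: recompress(original, fmt) for fmt in formats}

for fmt, img in compressed.items():
    # getbbox() returns None only when the difference image is entirely zero.
    changed = ImageChops.difference(original, img).getbbox() is not None
    print(fmt, img.size, "pixels changed" if changed else "identical")
```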

🤔 Can our TGIF dataset be used to solve these challenges in future work?

The dataset & code of the TGIF Dataset are available on GitHub.

Paper: TGIF: Text-Guided Inpainting Forgery Dataset

The paper was partly financed by the COM-PRESS project, which received subsidies from the Flemish government’s Department of Culture, Youth & Media (Departement Cultuur Jeugd & Media).