To finish the line-up, a third paper titled “360DIV: 360° Video Plus Depth for Fully Immersive VR Experiences” was accepted at the IEEE International Conference on Consumer Electronics (ICCE) 2023.

The work is being presented at ICCE in Las Vegas, Nevada, USA, which takes place from 6 to 8 January 2023.

360° cameras have become more accessible to the general public, leading to an ever-growing mountain of 360° video content. Viewers at home can put on a Virtual Reality headset and watch videos of beautiful scenery and events from all over the world. Because such content is easy to create and looks photorealistic, it is promising for the multimedia industry, which keeps striving for ever more immersive experiences.

However, because a 360° video only shows the environment from a single point in 3D space, the viewer has to sit perfectly still so as not to break the illusion. As soon as the user moves, the scenery moves along with them. In other words, 360° videos do not provide motion parallax or six degrees of freedom (6DOF), which significantly lowers the quality of experience.

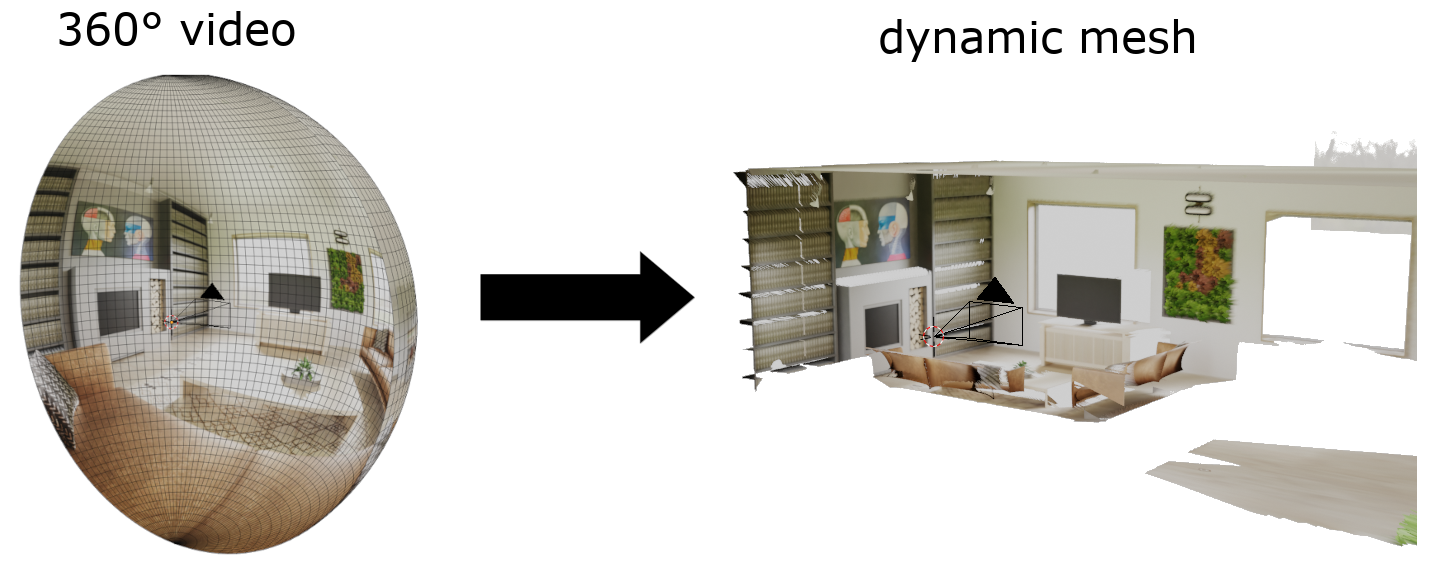

This paper introduces motion parallax by adding depth to the 360° video. The depth map can be created in several ways, for example with multi-view stereo, deep learning, or depth cameras. Essentially, it describes the geometry of the captured scene.
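To make the link between a depth map and scene geometry concrete, here is a minimal Python sketch of back-projecting one pixel of a 360° frame into 3D. It assumes the video uses the equirectangular projection and that each depth value is the radial distance from the camera centre; the function name and the axis convention are hypothetical and not taken from the paper.

```python
import math


def equirect_to_point(u, v, width, height, depth):
    """Back-project pixel (u, v) of a width x height equirectangular frame to 3D.

    Hypothetical convention: u runs left to right over longitude [-pi, pi),
    v runs top to bottom over latitude [pi/2, -pi/2], pixel centres sit at
    +0.5, and `depth` is the radial distance from the camera centre.
    """
    lon = ((u + 0.5) / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - ((v + 0.5) / height) * math.pi
    # Unit viewing direction: y points up, -z is straight ahead at lon = 0.
    dx = math.cos(lat) * math.sin(lon)
    dy = math.sin(lat)
    dz = -math.cos(lat) * math.cos(lon)
    # Scaling the unit direction by the depth gives the 3D surface point.
    return (depth * dx, depth * dy, depth * dz)
```

Applying this to every pixel turns one video frame plus its depth map into a cloud of 3D points around the camera.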

Example conversion of (part of) a 360° video to a mesh (that changes over time) by determining the depth of each pixel in the video.


The paper proposes a framework that automatically converts the 360° video and its depth map into a dynamic, textured 3D mesh. In addition, the framework builds a second textured mesh that handles inpainting, filling in the parts of the scene that the camera never captured. Both dynamic meshes are rendered in real time in VR.
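One common way to turn a per-pixel depth map into a mesh, sketched here in Python under our own assumptions rather than as the paper's implementation, is to create one vertex per pixel and two triangles for each square of four neighbouring pixels. Connectivity and texture coordinates depend only on the frame resolution, so for a video they can stay fixed while each frame's depth map only moves the vertices. The helper name `grid_mesh` is hypothetical.

```python
def grid_mesh(width, height):
    """Build mesh connectivity for a width x height depth map.

    Returns (uvs, triangles): one texture coordinate in [0, 1] per pixel
    (row-major order, so vertex index = v * width + u) and a list of
    vertex-index triples, two per square of four neighbouring pixels.
    """
    uvs = [((u + 0.5) / width, (v + 0.5) / height)
           for v in range(height) for u in range(width)]
    triangles = []
    for v in range(height - 1):
        for u in range(width - 1):  # the 360° seam is left open here
            a = v * width + u   # top-left
            b = a + 1           # top-right
            c = a + width       # bottom-left
            d = c + 1           # bottom-right
            triangles.append((a, c, b))
            triangles.append((b, c, d))
    return uvs, triangles
```

For simplicity the sketch does not connect the left and right image edges; closing the seam would require duplicating the seam vertices so that texture coordinates do not jump from 1 back to 0 inside a triangle.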

The dark gray pixels (left) are parts of the scene that were not captured by the original 360° camera. They can be given a more suitable color through inpainting (right).
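Such holes typically appear where a naive mesh would stretch triangles across a depth discontinuity, for example between a foreground object and the wall behind it. The paper does not describe how these regions are detected; the Python sketch below uses a simple depth-ratio test with a hypothetical threshold `max_ratio` to separate triangles that lie on one surface from those that bridge a discontinuity and would therefore need inpainting.

```python
def split_by_depth_edges(triangles, depths, max_ratio=1.1):
    """Separate triangles on one surface from those spanning a depth edge.

    `triangles` holds vertex-index triples and `depths` holds one positive
    depth per vertex. `max_ratio` is a hypothetical tuning threshold: a
    triangle whose farthest vertex is more than `max_ratio` times as far as
    its nearest vertex is treated as bridging a discontinuity.
    """
    kept, stretched = [], []
    for tri in triangles:
        ds = [depths[i] for i in tri]
        if max(ds) / min(ds) > max_ratio:
            # Would smear colour over a region the camera never saw.
            stretched.append(tri)
        else:
            kept.append(tri)
    return kept, stretched
```

A relative threshold is used instead of an absolute one because both depth noise and the spacing between neighbouring samples grow with distance; this is a design choice of the sketch.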


The paper comes with a VR demo showcasing a living room scene. The user can switch between three scenarios: a monoscopic 360° video, a stereoscopic 360° video, and the 3D meshes produced by the proposed framework. The goal is to compare the quality of experience across the three options. Only the last scenario offers motion parallax, but if the viewer moves too far from the initial position, the inpainted regions become more noticeable. Several techniques were considered for creating the depth map, but none reached the accuracy this demo required. Instead, the ground-truth depth map of the computer-generated scene was used.

The proposed method of converting 360° videos to meshes also has the benefit that other objects and overlays can easily be added to the scene.

The demo is available: 360DIV demo.