We are pleased to announce that our paper, “Improved Deepfake Video Detection Using Convolutional Vision Transformer,” has been accepted for presentation at the IEEE CTSoc Conference on Gaming, Entertainment, and Media (GEM) 2024. This research was conducted in collaboration with Ghent University – imec, and Addis Ababa University.

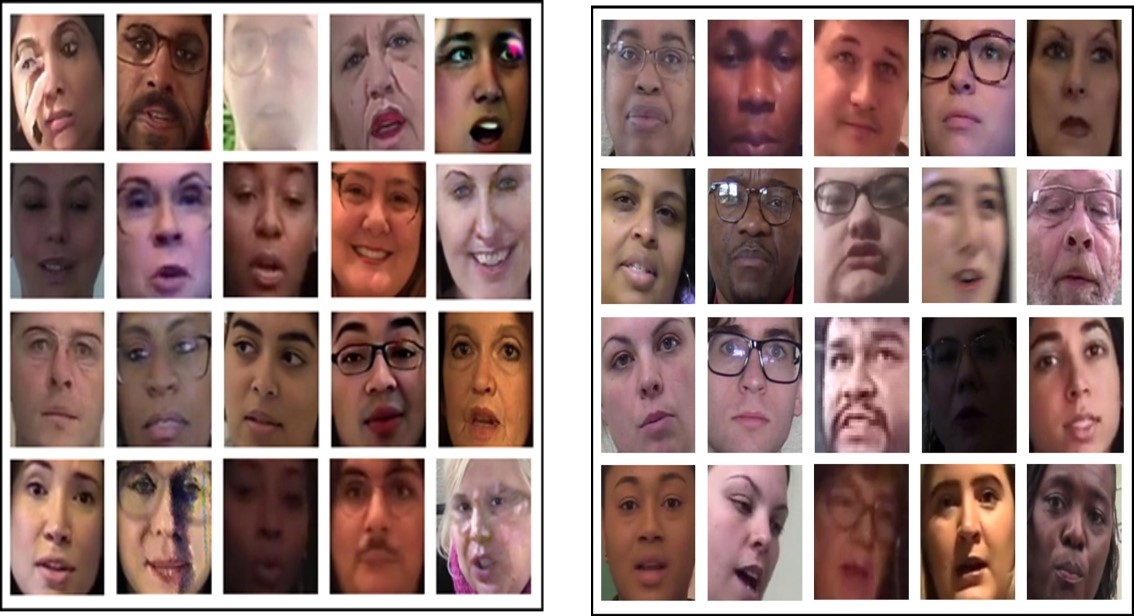

Deepfakes, which are hyper-realistic videos with manipulated, replaced, or forged faces, pose significant risks, including identity fraud, phishing, misinformation, and scams. Although various deep learning models have been developed to detect deepfake videos, they often fall short in accurately identifying deepfakes, especially those generated using advanced techniques.

In this work, we propose an improved method for deepfake video detection using the Convolutional Vision Transformer (CViT2). Our CViT2 model combines Convolutional Neural Networks (CNNs) and Vision Transformers to enhance the detection process by learning both local and global image features using an attention mechanism. The CViT2 architecture includes a CNN for feature extraction and a Vision Transformer for feature categorization.

We trained and evaluated our model using five datasets: Deepfake Detection Challenge Dataset (DFDC), FaceForensics++ (FF++), Celeb-DF v2, DeepfakeTIMIT, and TrustedMedia. CViT2 achieves high accuracy on the following test sets: 95% on DFDC, 94.8% on FF++, 98.3% on Celeb-DF v2, and 76.7% on TIMIT. These results highlight the potential of our CViT2 model to effectively combat deepfakes and contribute to multimedia forensics.

The CViT2 model’s robustness and accuracy stem from its dual-component architecture. The Feature Learning component, based on the VGG architecture, extracts low-level features from face images. These features are then transformed by the Vision Transformer component, which uses multi-head self-attention and multi-layer perceptron blocks to detect deepfakes.

Our proposed model can detect diverse deepfake scenarios, making it a valuable tool for digital content verification and forensic applications.

To support further research and collaboration, we have open-sourced our model and code. This work represents a step forward in the fight against deepfakes, with broad applications in law enforcement, media integrity, and beyond. As we continue to refine our approach, we aim to expand our dataset and enhance the model’s generalizability, paving the way for more secure and reliable digital media environments.

The source code of CViT is available on GitHub.com.

The source code of CViT is available on GitHub.com.

Paper download: Improved Deepfake Video Detection Using Convolutional Vision Transformer