We present OpenDIBR, an application that allows a viewer to freely move around in a scene reconstructed from camera captures (images/videos) and their corresponding depth maps. If you want to try out our real-time light field renderer for yourself, visit https://github.com/IDLabMedia/open-dibr for the code, datasets and clear guidelines. You can use your desktop display and keyboard, or your virtual reality headset. The GIFs below give you an idea of what to expect.

We are happy to share the associated published paper, “OpenDIBR: Open Real-Time Depth-Image-Based renderer of light field videos for VR”, with you. It contains a detailed comparison with the state of the art in terms of performance, visual quality and features.

The framework started from a need for view synthesis methods that satisfy two requirements:

- The synthesized view should be of high quality, even if the scene is large and only sparsely sampled by the input cameras. This is difficult because a lot of information about the scene is never captured, which can lead to disocclusions (regions visible in the new view but hidden in every input view) and incorrectly rendered reflective/semi-transparent objects.

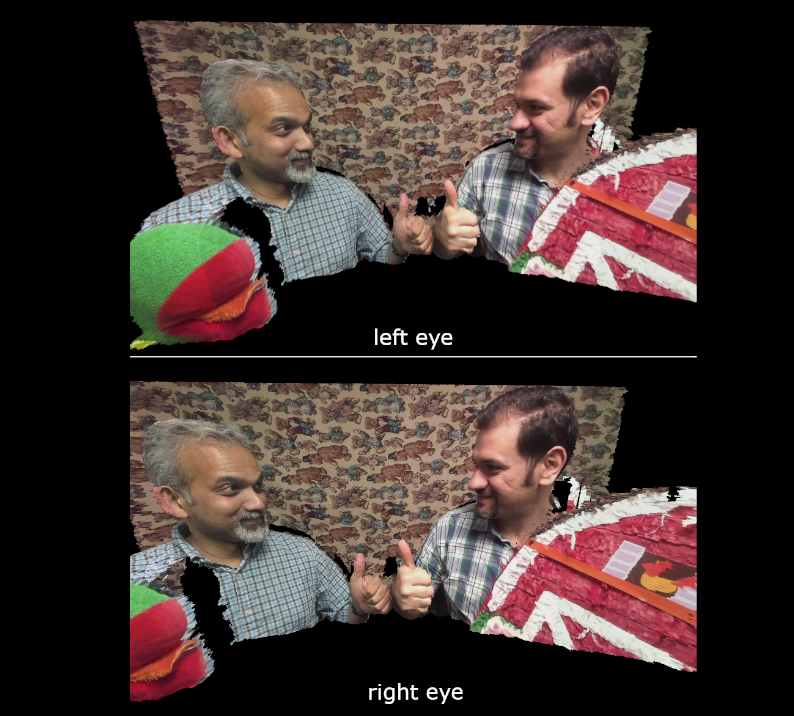

- The synthesis should run in real time, even in the highly demanding case of Virtual Reality with input videos. This means that gigabytes of video data need to be streamed from disk to memory at 30 frames per second, while two high-resolution images (one for each eye) need to be rendered at 90 frames per second.
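To put these requirements into numbers, here is a back-of-the-envelope sketch of the per-frame time budgets and the amount of decoded color and depth data per second. The capture setup (16 cameras at 1920×1080, with color and depth both stored as 8-bit YUV 4:2:0) is a hypothetical example, not one of the OpenDIBR datasets, and the result counts decoded frames, which are far larger than the compressed videos on disk.

```python
# Back-of-the-envelope real-time budget for light field video in VR.
# All capture parameters below are hypothetical, chosen only for illustration.

NUM_CAMERAS = 16            # hypothetical number of input cameras
WIDTH, HEIGHT = 1920, 1080  # hypothetical resolution of every color and depth video
VIDEO_FPS = 30              # frame rate of the input videos
DISPLAY_FPS = 90            # typical VR headset refresh rate


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to produce one frame at the given rate."""
    return 1000.0 / fps


def decoded_bytes_per_second(num_cameras: int, width: int, height: int,
                             fps: int, with_depth: bool = True) -> int:
    """Bytes/s of decoded 8-bit YUV 4:2:0 frames (1.5 bytes per pixel).

    Assumes one color stream per camera, plus one depth stream of the
    same size and format when with_depth is True.
    """
    bytes_per_frame = width * height * 3 // 2
    streams = num_cameras * (2 if with_depth else 1)
    return streams * bytes_per_frame * fps


if __name__ == "__main__":
    print(f"Video frame budget:   {frame_budget_ms(VIDEO_FPS):.2f} ms")    # 33.33 ms
    print(f"Display frame budget: {frame_budget_ms(DISPLAY_FPS):.2f} ms")  # 11.11 ms, for both eyes
    rate = decoded_bytes_per_second(NUM_CAMERAS, WIDTH, HEIGHT, VIDEO_FPS)
    print(f"Decoded data rate:    {rate / 1e9:.2f} GB/s")                   # 2.99 GB/s
```

Under these assumptions the GPU has to handle roughly 3 GB of decoded frames every second, while still rendering both eye views within about 11 ms per displayed frame.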

We noticed that the state of the art is still far from meeting both requirements, even with the latest hardware at one’s disposal. However, we expected that a highly optimized depth-image-based renderer could run in real time at a decent visual quality. OpenDIBR is that proof of concept, made available to the general public so anyone can test it and see how the different speed optimizations affect the visual quality.

OpenDIBR takes as input a set of images/videos captured by calibrated cameras, together with their corresponding depth maps. The color and depth videos are stored on disk as HEVC videos and decoded on the GPU with NVIDIA’s Video Codec SDK. OpenGL then blazes through the typical 3D warping and blending steps of a depth-image-based renderer.
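The geometry behind 3D warping fits in a few lines: a pixel with a known depth is lifted to a 3D point using the source camera's calibration, then projected into the virtual camera. OpenDIBR does this on the GPU with OpenGL; the CPU sketch below only illustrates the math for a single pixel and leaves out blending. It assumes an undistorted pinhole model, depth stored as z-depth in world units, and an OpenCV-style convention (x right, y down, camera looking along +z). `PinholeCamera`, `unproject`, `project` and `warp_pixel` are hypothetical names, not part of the OpenDIBR code.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]  # row-major 3x3 matrix


@dataclass
class PinholeCamera:
    """Hypothetical camera description: pinhole intrinsics, no lens distortion."""
    fx: float
    fy: float
    cx: float
    cy: float
    rotation: Mat3  # camera-to-world rotation
    position: Vec3  # camera centre in world coordinates


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def transpose(m: Mat3) -> Mat3:
    return [[m[j][i] for j in range(3)] for i in range(3)]


def unproject(cam: PinholeCamera, u: float, v: float, depth: float) -> Vec3:
    """Lift pixel (u, v) with z-depth `depth` to a 3D world point."""
    x = (u - cam.cx) / cam.fx * depth
    y = (v - cam.cy) / cam.fy * depth
    p_world = mat_vec(cam.rotation, (x, y, depth))
    return tuple(p_world[i] + cam.position[i] for i in range(3))


def project(cam: PinholeCamera, p: Vec3) -> Optional[Tuple[float, float, float]]:
    """Project world point p into cam; returns (u, v, z) or None if behind the camera."""
    rel = tuple(p[i] - cam.position[i] for i in range(3))
    x, y, z = mat_vec(transpose(cam.rotation), rel)  # world-to-camera rotation is R^T
    if z <= 0.0:
        return None
    return (cam.fx * x / z + cam.cx, cam.fy * y / z + cam.cy, z)


def warp_pixel(src: PinholeCamera, dst: PinholeCamera,
               u: float, v: float, depth: float) -> Optional[Tuple[float, float, float]]:
    """3D-warp one source pixel into the target (virtual) view."""
    return project(dst, unproject(src, u, v, depth))


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    src = PinholeCamera(1000.0, 1000.0, 960.0, 540.0, identity, (0.0, 0.0, 0.0))
    dst = PinholeCamera(1000.0, 1000.0, 960.0, 540.0, identity, (0.1, 0.0, 0.0))
    # The centre pixel at depth 2 shifts 50 px left in a view moved 0.1 to the right.
    print(warp_pixel(src, dst, 960.0, 540.0, 2.0))  # (910.0, 540.0, 2.0)
```

The returned z value is what a renderer can use for depth testing when several warped pixels land on the same target pixel.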

The framerate of OpenDIBR can be significantly increased in several ways. For example, the number of triangles being rendered can be reduced, or only a subset of the input images/videos can be processed to synthesize each new view. OpenDIBR chooses this subset intelligently to minimize disocclusions while still trying to render reflective/semi-transparent objects correctly.
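OpenDIBR’s own selection strategy is not reproduced here. The sketch below is a much simpler, hypothetical heuristic that only illustrates the idea of picking a subset of inputs per synthesized view: keep the `k` input cameras nearest to the virtual camera, skipping any whose viewing direction differs by more than a chosen angle. Nearby cameras with similar viewing directions tend to see the scene, including view-dependent effects such as reflections, most like the virtual view does. `select_input_cameras`, `k` and `max_angle_deg` are illustrative names and parameters.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in radians between two non-zero direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return math.acos(max(-1.0, min(1.0, dot / norm)))  # clamp against rounding errors


def select_input_cameras(virtual_pos: Vec3, virtual_dir: Vec3,
                         cam_positions: Sequence[Vec3], cam_dirs: Sequence[Vec3],
                         k: int, max_angle_deg: float = 60.0) -> List[int]:
    """Hypothetical heuristic: indices of the k input cameras closest to the
    virtual camera, ignoring cameras that look too far away from its direction."""
    max_angle = math.radians(max_angle_deg)
    candidates = []
    for i, (pos, direction) in enumerate(zip(cam_positions, cam_dirs)):
        if angle_between(virtual_dir, direction) > max_angle:
            continue  # this camera sees the scene from a very different side
        candidates.append((math.dist(virtual_pos, pos), i))
    candidates.sort()  # nearest first
    return [i for _, i in candidates[:k]]


if __name__ == "__main__":
    positions = [(-1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
                 (2.0, 0.0, 0.0), (0.5, 0.0, 0.0)]
    directions = [(0.0, 0.0, 1.0)] * 4 + [(0.0, 0.0, -1.0)]  # last camera faces backwards
    print(select_input_cameras((0.6, 0.0, 0.0), (0.0, 0.0, 1.0),
                               positions, directions, k=2))  # [2, 1]
```

Lowering `k` means less decoding and warping per frame, at the cost of a higher risk of disocclusions; the backward-facing camera in the example is skipped even though it is the closest one.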

In the paper, the visual quality of OpenDIBR is compared, both subjectively and objectively, against that of instant-ngp NeRF and MPEG’s TMIV. On average, TMIV achieves slightly higher quality than OpenDIBR, which is to be expected since it has no runtime constraints (and is much slower). NeRF tends to produce much blurrier results, since it needs more input images than a sparse capture provides, but when OpenDIBR is given a bad depth map, NeRF can outperform it. OpenDIBR’s performance, however, is much closer to real time.

All code and instructions can be found on GitHub.